Mar. 06, 2025

[Collaborative Research] Automated Detection of Small Birds! Advancing Computer Vision Research through International Conference Competitions

Toyota Motor Corporation's Frontier Research Center is conducting research in computer vision, a field that aims to build visual systems that, like the human eye, process video and image inputs to perceive and understand their surroundings. This research spans a wide range of applications, including robotics and mobility. In particular, "small object detection," which focuses on accurately recognizing objects that are difficult for visual systems to capture, remains an open academic challenge even as demand for real-world applications grows rapidly, and it is attracting increasing attention.

In this article, we interviewed Mr. Yuki Kondo, who served as the Technical Event Chair for the International Conference on Machine Vision Applications (MVA) 2023 and organized a competition on small object detection*1. We discussed the research on small object detection, its potential applications, and the details of the competition.

The Cutting Edge of Small Object Detection

- What is small object detection technology?

- Kondo

"Small object detection" is a technique for identifying and localizing extremely small objects in images and videos, accurately estimating their position, size, and category (class). This task falls under object detection, which itself is a subfield of image recognition, a core domain in computer vision.

Image recognition broadly refers to technologies that identify and classify objects, people, text, and actions in images or videos. Object detection extends this capability by not only classifying objects but also determining their location and size within an image. Among object detection tasks, small object detection (illustrated in Figure 1) focuses specifically on objects that occupy very few pixels[1], with the aim of identifying them precisely despite their minimal visual footprint.

Small object detection has a wide range of applications across the following fields:

- Medical field: Analysis of pathological tissues and early cancer detection

- Autonomous driving: Recognition of distant pedestrians and obstacles

- Construction: Automated detection of structural defects

- Security: Identification of suspicious objects in surveillance footage

- Aerospace: Detection of buildings and infrastructure in satellite imagery

By enhancing conventional object detection, small object detection has the potential to provide more precise and comprehensive recognition results, making it a crucial advancement for numerous real-world applications.

- Figure 1 Comparison of (a) Object Detection and (b) Small Object Detection Tasks

- [1] A commonly used definition of small objects is those whose bounding box area is smaller than 32² (32 × 32 = 1,024) pixels*3, or whose area is less than 0.56% of the image area*4.

- [2] The image in Figure 1*5 is used in compliance with the CC BY 2.0 license.
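
To make the two definitions in Note [1] concrete, here is a minimal Python sketch that checks a single bounding box against each of them. The function names are hypothetical, boxes are assumed to be given as width and height in pixels, and the image sizes in the usage lines are illustrative only, not taken from any dataset.

```python
def is_small_absolute(box_w: float, box_h: float, max_side: int = 32) -> bool:
    """Hypothetical helper: absolute rule, box area below 32^2 = 1,024 pixels."""
    return box_w * box_h < max_side ** 2


def is_small_relative(box_w: float, box_h: float,
                      img_w: int, img_h: int, max_ratio: float = 0.0056) -> bool:
    """Hypothetical helper: relative rule, box area below 0.56% of the image area."""
    return (box_w * box_h) / (img_w * img_h) < max_ratio


# A 20 x 20 box in an illustrative 3840 x 2160 (4K) frame
print(is_small_absolute(20, 20))              # True  (400 < 1,024)
print(is_small_relative(20, 20, 3840, 2160))  # True  (about 0.005% < 0.56%)

# A 100 x 60 box in an illustrative 640 x 480 image
print(is_small_absolute(100, 60))             # False (6,000 >= 1,024)
print(is_small_relative(100, 60, 640, 480))   # False (about 1.95% >= 0.56%)
```

The two criteria can disagree: a 40 × 40 box is not small under the absolute rule (1,600 ≥ 1,024 pixels), yet it covers only about 0.02% of a 4K frame and is therefore small under the relative rule. High-resolution UAV footage is exactly where this difference matters.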

- What are the current technical challenges?

- Kondo

Like other computer vision tasks, object detection has advanced significantly in recent years due to progress in deep learning. In particular, Convolutional Neural Networks (CNNs)*6 have greatly contributed to improving detection accuracy by effectively capturing local features within images. Meanwhile, Transformers*7 have enabled even more sophisticated detection by effectively capturing global scene information. These technological innovations have led to a dramatic improvement in object detection.

However, despite these advancements, small object detection still faces numerous challenges. Small objects tend to blend into the background and have limited visual features, making them extremely difficult to detect. Additionally, factors such as motion blur and image noise further degrade detection accuracy. These issues are particularly pronounced in dynamic scenes, such as those captured by Unmanned Aerial Vehicles (UAVs), in-vehicle cameras, or handheld devices.

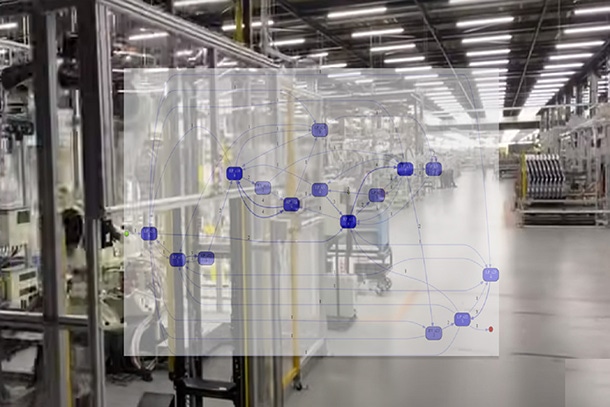

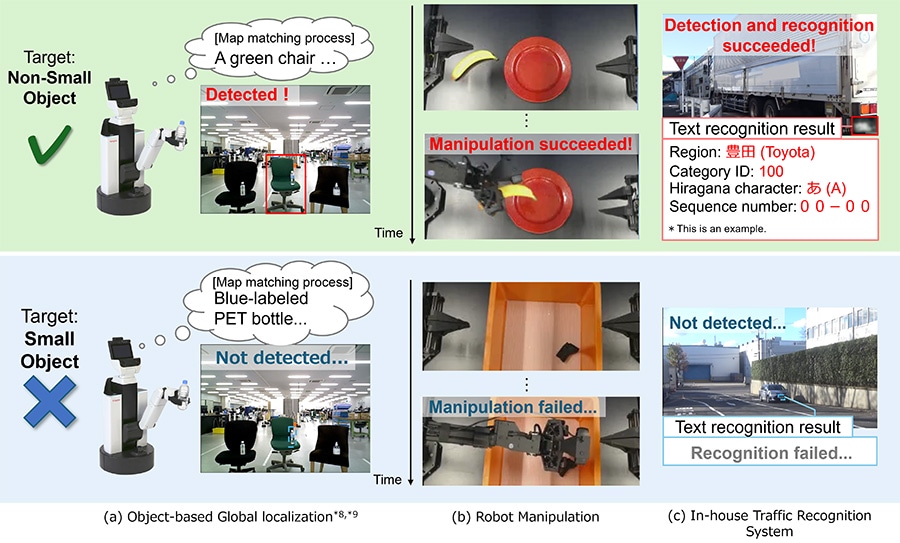

The challenges of small object detection have also surfaced in our internal research and development projects. For instance, object recognition for global localization*8*9 and for manipulation in robotics research, as well as a license plate recognition system developed for internal use to optimize traffic flow within the company, have all been affected by low-resolution conditions (Figure 2).

To address these challenges, we organized a competition as part of a broader "Collaborative Research" initiative that aims to engage the entire research community in defining the field's key issues and driving advances in small object detection. By identifying and sharing these challenges both internally and externally through the competition, we aim to foster a collaborative ecosystem that accelerates progress in this domain.

- Figure 2 Examples of Small Object Detection Failures in Internal R&D Projects

Small Object Detection for Spotting Birds (SOD4SB) Challenge@MVA2023

- Can you tell us about the 2023 competition?

- Kondo

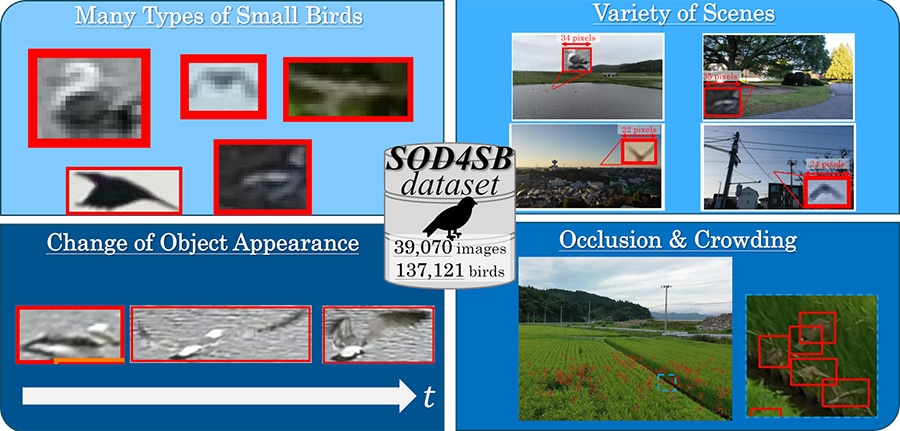

This competition was organized as part of the international conference MVA2023. While conventional small object detection tasks have primarily focused on humans and buildings, this competition set a new challenge: detecting birds captured by UAVs. To support it, we developed a new dataset, SOD4SB (Figure 3). The dataset systematically incorporates the following challenges specific to detecting small birds, making it a demanding benchmark for improving detection accuracy on real-world UAV footage:

- Diversity of species: Birds exhibit a wide range of appearances across different species

- Diversity of backgrounds: Various environments such as farmland, urban areas, and parks

- Appearance variation: Birds' flapping and other movements cause significant changes in their appearance between frames

- Occlusion and crowding: Birds frequently gather in flocks, leading to mutual occlusion

- Camouflage effects: Birds' appearances often blend into their surroundings

- Motion blur: Image degradation due to the movement of both birds and UAVs

Because of these factors, the SOD4SB dataset also embodies unresolved academic challenges common to small object detection in general, with the goal of advancing research in the field. Techniques developed for this demanding task are expected to improve small object detection technology more broadly and to lead to more generalizable methods.

- Figure 3 Overview of the SOD4SB Dataset Used in the Competition (Cited from *1)

- What were the steps leading up to the competition?

- Kondo

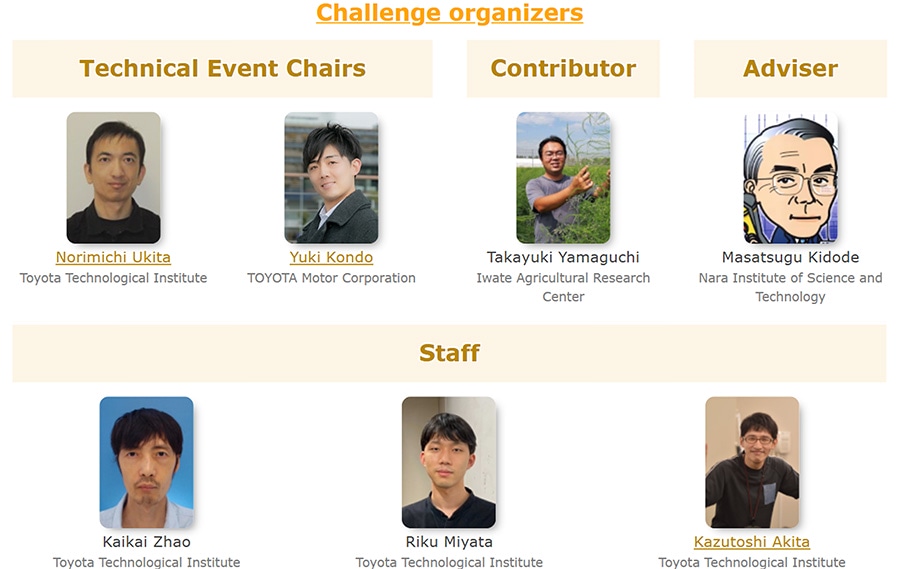

This project began with an invitation from my former supervisor, Prof. Norimichi Ukita of the Toyota Technological Institute. Inspired by MVA's mission of "fostering interaction between academia and industry," which aligned with my own mission as an industry researcher, I began exploring the feasibility of organizing a competition with both academic and practical value.

At the same time, small object recognition had emerged as a challenge both in my own Spatial Intelligence research*10 and in other research projects at the Frontier Research Center. I also recalled that the bird detection research*11 conducted in Professor Ukita's lab faced similar challenges. On reviewing that bird detection dataset, I confirmed that most of the birds met the definition of small objects (see Note [1] above). Leveraging the assets and insights from this prior research, we decided to focus the competition on small object detection for spotting birds.

To make the competition more challenging and meaningful, we then expanded the dataset by increasing its scale, diversifying the scenes, and reinforcing the challenging aspects mentioned earlier. This led to the development of the SOD4SB dataset.

To prepare a more diverse dataset and explore potential applications of small bird detection, we collaborated with Dr. Takayuki Yamaguchi, formerly of the Iwate Agricultural Research Center and now with Iwate's Coastal Regional Development Bureau, who has been conducting research on smart agriculture technology using UAVs. As a result, as illustrated in Figure 4 and described below, we were able to explore a wider range of applications than initially anticipated.

- Autonomous UAV systems: Detecting and avoiding bird collisions to ensure safe flight operations

- Agricultural protection systems: Identifying and tracking harmful birds to prevent damage to farmlands and rice paddies

- Automated ecological monitoring systems: Tracking bird populations and movements to support environmental conservation

Furthermore, Prof. Emeritus Masatsugu Kidode of the Nara Institute of Science and Technology (NAIST) joined as an advisor, while researchers and students from Professor Ukita's lab participated as staff members. Together, the seven committee members drove the competition forward (Figure 5).

To maximize the impact of the competition, we studied globally recognized competition platforms such as Kaggle and SIGNATE, as well as competitions held at top-tier international conferences, including the Conference on Computer Vision and Pattern Recognition (CVPR), the European Conference on Computer Vision (ECCV), and the International Conference on Computer Vision (ICCV). Based on these findings, we implemented the following:

- Offering incentives such as peer-reviewed paper acceptance, free invitations to international conferences, cash prizes, and certificates

- Providing a baseline model to facilitate easy participation

- Establishing a Discord platform for participants to discuss and collaborate

- Using MVA's official social media accounts to share real-time updates on competition progress

- Figure 4 Real-world applications of small bird detection (Materials sourced from *12)

- Figure 5 MVA2023 SOD4SB Challenge Committee Members (Cited from the MVA2023 Challenge Website*13)

- Can you tell us more about the outcomes of the competition?

- Kondo

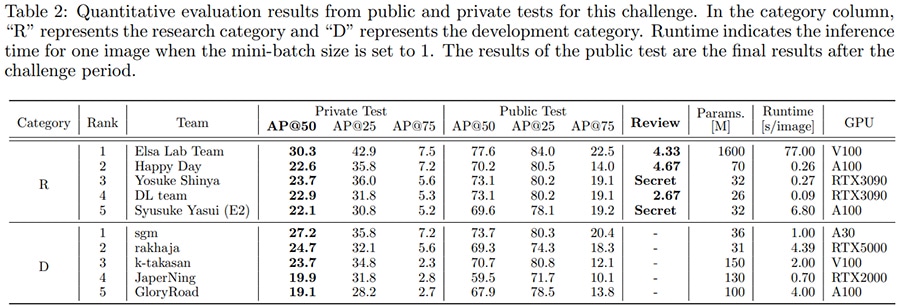

A total of 223 participants joined the competition and submitted 1,045 entries by the end of the event. Participants came from both academia and industry across multiple countries. This broad participation matched the competition's goal of posing academic challenges in computer vision while encouraging practical solutions for real-world scenarios. As a result, many refined small object detection methods were proposed. At the conference, the top four teams presented their approaches in the Technical Event session (Figure 6).

In the quantitative evaluation, the submitted models substantially outperformed the baseline model provided by the organizers, as illustrated in Figure 7. Notably, the winning team, Elsa Lab Team led by H.-Y. Hou*14, raised AP50 over the baseline from 46.4 to 77.6 on the Public Test and from 15.4 to 30.3 on the Private Test.
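
AP50 is average precision computed when a predicted box counts as a correct detection only if its intersection over union (IoU) with a not-yet-matched ground-truth box is at least 0.5. The following minimal Python sketch computes a simplified single-image, single-class AP50 with greedy matching and all-point interpolation. It is an illustrative assumption, not the competition's official evaluation code, which may differ in details such as interpolation, aggregation over images, and tie handling; the function names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def ap50(preds: List[Tuple[float, Box]], gts: List[Box], thr: float = 0.5) -> float:
    """Simplified AP at IoU >= thr (0.5 gives AP50) for one image and one class."""
    if not gts:
        return 0.0
    preds = sorted(preds, key=lambda p: p[0], reverse=True)  # highest score first
    matched = [False] * len(gts)
    tp_flags = []
    for _, box in preds:
        # Greedily match to the best-overlapping ground truth not yet used
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            if matched[j]:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
        tp_flags.append(best_j >= 0)
    # Precision and recall after each prediction in score order
    precisions, recalls, tp = [], [], 0
    for k, is_tp in enumerate(tp_flags, start=1):
        tp += int(is_tp)
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Make precision non-increasing, then sum the area under the PR curve
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 21, 31, 31))]
print(round(ap50(preds, gts), 3))                         # 0.833
print(round(iou((0, 0, 10, 10), (2, 2, 12, 12)), 2))      # 0.47
print(round(iou((0, 0, 100, 100), (2, 2, 102, 102)), 2))  # 0.92
```

The last two lines show why the IoU threshold is unforgiving at small scales. Shifting a 10 × 10 box by 2 pixels in each direction drops IoU to about 0.47, below the 0.5 threshold. The same shift on a 100 × 100 box still gives about 0.92.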

All top-performing teams introduced novel and effective methodologies, incorporating ensemble strategies*14, data augmentation techniques*1*14, and multi-scale feature representations*1*15. Additionally, some teams addressed critical open problems in small object detection, including the proposal of a novel evaluation metric specifically designed for small object detection*16, contributing unique insights into the field.
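
Ensemble strategies combine the outputs of several detectors. As one generic illustration, the Python sketch below implements a simplified score-weighted box fusion. Boxes from all models are clustered greedily by IoU. Each cluster's coordinates are averaged using the detection scores as weights, and the fused score is scaled down when fewer models agree. This technique is an assumption chosen for illustration, loosely modeled on weighted boxes fusion, and is not the method used by any team in the competition. The function name `fuse_ensemble` and its 0.55 threshold are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Det = Tuple[float, Box]                  # (score, box)


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def fuse_ensemble(model_outputs: List[List[Det]], thr: float = 0.55) -> List[Det]:
    """Hypothetical sketch: merge per-model detections by score-weighted averaging."""
    n_models = len(model_outputs)
    all_dets = sorted((d for out in model_outputs for d in out),
                      key=lambda d: d[0], reverse=True)
    clusters: List[List[Det]] = []
    fused: List[Box] = []
    for score, box in all_dets:
        # Join the first cluster whose current fused box overlaps enough
        match = next((k for k, fb in enumerate(fused) if iou(box, fb) >= thr), None)
        if match is None:
            clusters.append([(score, box)])
            fused.append(box)
            continue
        clusters[match].append((score, box))
        total = sum(s for s, _ in clusters[match])
        # Fused box = score-weighted mean of each coordinate
        fused[match] = tuple(
            sum(s * b[i] for s, b in clusters[match]) / total for i in range(4))
    results = []
    for members, fbox in zip(clusters, fused):
        mean = sum(s for s, _ in members) / len(members)
        # Down-weight clusters that fewer models contributed to
        results.append((mean * min(len(members), n_models) / n_models, fbox))
    return results


model_a = [(0.9, (10, 10, 20, 20)), (0.8, (100, 100, 110, 110))]
model_b = [(0.6, (11, 11, 21, 21))]
# The overlapping pair is fused; the box found by only one model keeps half its score
for score, box in fuse_ensemble([model_a, model_b]):
    print(round(score, 2), [round(v, 1) for v in box])
```

Averaging coordinates, rather than keeping only the top-scoring box as non-maximum suppression does, lets several models refine each other's localization. This can matter for small objects, where an offset of one or two pixels changes IoU substantially.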

The baseline code, dataset, and benchmarking system developed for this competition remain publicly available*13, allowing researchers and engineers to freely utilize them for further method development and performance evaluation. We anticipate that this foundation will continue to advance small object detection technology and expand its real-world applicability.

- Figure 6 An oral presentation by one of the top four teams in the Technical Event session

- Figure 7 Competition winner results (Cited from *1)

Conclusion

- What are your future plans?

- Kondo

We are currently organizing the "Small Multi-Object Tracking for Spotting Birds (SMOT4SB) Challenge"*17 at MVA2025 as an extension of SOD4SB. This competition advances the task from single-image detection to multi-object tracking across video frames. As shown in Video 1, SMOT4SB is an even more challenging and practical task than SOD4SB, designed to facilitate deployment of the technology in dynamic environments. We encourage researchers and engineers to visit the MVA2025 official challenge website for further details and to participate.
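
Moving from detection to tracking means linking detections across frames so that each bird keeps a consistent ID. A minimal baseline, sketched below in Python, is greedy IoU association between consecutive frames. The highest-overlap (track, detection) pairs are linked first, and leftover detections start new tracks. This is a simplified assumption for illustration, not the SMOT4SB baseline or evaluation protocol. The `track` function and its 0.3 threshold are hypothetical, and tracks that miss a frame are simply dropped.

```python
from itertools import count
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def track(frames: List[List[Box]], thr: float = 0.3) -> List[Dict[int, Box]]:
    """Hypothetical sketch: assign IDs by greedy IoU linking between frames."""
    next_id = count()
    prev: Dict[int, Box] = {}
    results: List[Dict[int, Box]] = []
    for dets in frames:
        # Score every (track, detection) pair; best overlaps are linked first
        pairs = sorted(((iou(pb, db), tid, j) for tid, pb in prev.items()
                        for j, db in enumerate(dets)), reverse=True)
        cur: Dict[int, Box] = {}
        used = set()
        for score, tid, j in pairs:
            if score < thr:
                break
            if tid in cur or j in used:
                continue
            cur[tid] = dets[j]
            used.add(j)
        # Detections left unmatched start new tracks
        for j, db in enumerate(dets):
            if j not in used:
                cur[next(next_id)] = db
        results.append(cur)
        prev = cur
    return results


frames = [[(0, 0, 10, 10), (50, 50, 60, 60)],
          [(52, 52, 62, 62), (2, 2, 12, 12)],
          [(4, 4, 14, 14)]]
for t, tracks in enumerate(track(frames)):
    print(t, sorted(tracks))  # IDs 0 and 1 persist; only ID 0 reaches frame 2
# A 10-px box that jumps 12 px has zero IoU with its previous position
print(track([[(0, 0, 10, 10)], [(12, 0, 22, 10)]])[1])
```

The last line shows why small, fast-moving targets are hard for this approach. A 10-pixel bird that moves 12 pixels between frames no longer overlaps its previous box, so IoU-only association starts a new track. Practical trackers often add a motion model or appearance cues to bridge such gaps.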

Moving forward, we will continue these initiatives to advance small object recognition technologies and promote their real-world applications both within and beyond our organization. Ultimately, we aim to contribute to solving challenges in society and creating new value through this work.

- Video 1 Example of multi-object tracking for birds in the SMOT4SB Challenge (Cited from the MVA2025 Challenge Site*17)

- What makes the Frontier Research Center an attractive place for research?

- Kondo

At the Frontier Research Center, researchers, including those early in their careers, are given the autonomy and flexibility to take an active part in both research and the organization of international conferences. This high degree of independence creates an environment where individuals can take on challenges with considerable freedom. Our center encourages research that pursues academic excellence while also emphasizing real-world implementation, with a focus on developing "human-centered systems." If you are interested in contributing to both foundational research and its practical applications, we hope you will join us in creating the future of technology at the Frontier Research Center.

Author

Yuki Kondo joined Toyota Motor Corporation in 2013. After completing the high school course at Toyota Technical Skills Academy in 2016, he worked in engine development. In 2018, he enrolled at Toyota Technological Institute through Toyota's internal academic advancement program. There, he conducted research in computer vision, focusing on super-resolution for recognition tasks, and received several awards, including the MVA'21 Best Practical Paper Award. After graduating from Toyota Technological Institute in 2022, he returned to Toyota Motor Corporation, where he has been engaged in robot vision and computer vision research. He is currently a member of the Spatial Intelligence Research Group, conducting research on image stitching and small object recognition while also taking part in research on autonomous navigation for robots.

References

| No. | Reference |
|---|---|
| *1 | Y. Kondo, N. Ukita, T. Yamaguchi, H.-Y. Hou, M.-Y. Shen, C.-C. Hsu, E.-M. Huang, Y.-C. Huang, Y.-C. Xia, C.-Y. Wang, C.-Y. Lee, D. Huo, M. A. Kastner, T. Liu, Y. Kawanishi, T. Hirayama, T. Komamizu, I. Ide, Y. Shinya, X. Liu, G. Liang, and S. Yasui, "MVA2023 Small Object Detection Challenge for Spotting Birds: Dataset, Methods, and Results," in Proceedings of the 18th International Conference on Machine Vision and Applications (MVA), 2023. |
| *2 | T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, "Microsoft COCO: Common Objects in Context," in Proceedings of the European Conference on Computer Vision (ECCV), 2014. |
| *3 | A. Torralba, R. Fergus, and W. T. Freeman, "80 Million Tiny Images: A Large Data Set for Nonparametric Object and Scene Recognition," IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), vol. 30, no. 11, pp. 1958-1970, 2008. |
| *4 | C. Chen, M.-Y. Liu, O. Tuzel, and J. Xiao, "R-CNN for Small Object Detection," in Proceedings of the Asian Conference on Computer Vision (ACCV), 2017. |
| *5 | Dirvish, "Husky Boarding," Flickr, CC BY 2.0 License, https://www.flickr.com/photos/dirvish/2273154848/. [Accessed: Feb. 5, 2025]. |
| *6 | Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, "Gradient-Based Learning Applied to Document Recognition," Proceedings of the IEEE, vol. 86, no. 11, pp. 2278-2324, 1998. |
| *7 | A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, "Attention Is All You Need," in Advances in Neural Information Processing Systems (NeurIPS), 2017. |
| *8 | S. Matsuzaki, T. Sugino, K. Tanaka, Z. Sha, S. Nakaoka, S. Yoshizawa, and K. Shintani, "CLIP-Loc: Multi-modal Landmark Association for Global Localization in Object-based Maps," in Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), 2024. |
| *9 | S. Matsuzaki, K. Tanaka, and K. Shintani, "CLIP-Clique: Graph-Based Correspondence Matching Augmented by Vision Language Models for Object-Based Global Localization," IEEE Robotics and Automation Letters, vol. 9, no. 11, pp. 10399-10406, 2024. |
| *10 | Toyota Motor Corporation, "Research on Spatial Intelligence," https://global.toyota/en/mobility/frontier-research/40802913.html. [Accessed: Feb. 5, 2025]. |
| *11 | S. Fujii, K. Akita, and N. Ukita, "Distant Bird Detection for Safe Drone Flight and Its Dataset," in Proceedings of the 17th International Conference on Machine Vision and Applications (MVA), 2021. |
| *12 | いらすとや (Irasutoya), "Free illustration website," https://www.irasutoya.com/. [Accessed: Mar. 20, 2023]. |
| *13 | MVA2023, "Small Object Detection Challenge for Spotting Birds 2023," https://www.mva-org.jp/mva2023/index.php?id=challenge. [Accessed: Feb. 5, 2025]. |
| *14 | H.-Y. Hou, M.-Y. Shen, C.-C. Hsu, E.-M. Huang, Y.-C. Huang, Y.-C. Xia, C.-Y. Wang, and C.-Y. Lee, "Ensemble Fusion for Small Object Detection," in Proceedings of the 18th International Conference on Machine Vision and Applications (MVA), 2023. |
| *15 | D. Huo, M. A. Kastner, T. Liu, Y. Kawanishi, T. Hirayama, T. Komamizu, and I. Ide, "Small Object Detection for Bird with Swin Transformer," in Proceedings of the 18th International Conference on Machine Vision and Applications (MVA), 2023. |
| *16 | Y. Shinya, "BandRe: Rethinking Band-Pass Filters for Scale-Wise Object Detection Evaluation," in Proceedings of the 18th International Conference on Machine Vision and Applications (MVA), 2023. |
| *17 | MVA2025, "Small Multi-Object Tracking for Spotting Birds (SMOT4SB) Challenge 2025," https://mva-org.jp/mva2025/index.php?id=challenge. [Accessed: Feb. 5, 2025]. |

Contact Information (about this article)

- Frontier Research Center

- frc_pr@mail.toyota.co.jp

![[Collaborative Research] Automated Detection of Small Birds!](/pages/global_toyota/mobility/frontier-research/2025/03/06/1300/20250306_01_kv_w1920.jpg)